December 17, 2025

The Agile Manifesto is now over two decades old. In that time, Scrum has become the dominant agile framework in software development. Together, they promised us working software, customer collaboration, and responsiveness to change.

What did it actually deliver?

For many teams that try to adopt Scrum, a different kind of dysfunction.

This isn't a critique of agile principles; those remain good principles to work by. Rather, it's a critique of agile in practice: the ceremonies that become rituals, the documentation that never gets written, the feedback loops that close too late, and the estimation theatre that everyone knows is fiction.

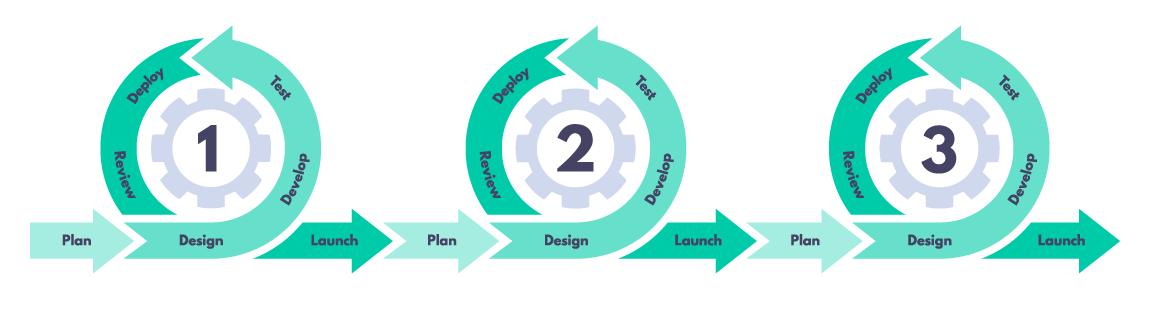

At GovMetric, we've stopped pretending these problems will solve themselves. Instead, we've built an AI-enhanced SDLC that addresses the weaknesses in agile adoption.

1. The Myth of Self-Organising Teams

What agile promised: Teams would organise around the work naturally. The right people would step up. Collaboration would emerge organically.

What actually happens: Knowledge silos form. Team members become bottlenecks. When someone leaves, months of context and system knowledge walk out the door. New team members take quarters, not sprints, to become productive. The "bus factor" is a constant, unspoken anxiety.

The AI fix: Codebases have always been a critical source of institutional knowledge. Context doesn't leave when people do: it's embedded in the code, and AI can read that code and explain it to us.

2. The Estimation Theatre

What agile promised: Story points and planning poker would give us realistic, shared understanding of work complexity.

What actually happens: Half the estimates are question marks. Developers who haven't seen the relevant code guess wildly. Teams develop learned helplessness - "we're always wrong anyway, so why try?" Velocity becomes a vanity metric that measures everything except actual predictability.

The AI fix: Features can be decomposed against the actual codebase. AI assesses complexity by examining the code that will need to change.

3. The "Working Software" That Nobody Wanted

What agile promised: Frequent delivery of working software would ensure we build the right thing through tight feedback loops.

What actually happens: Feedback comes at sprint review—after two weeks of building. The demo reveals misalignment. Stakeholders say "that's not quite what I meant." Course-correcting mid-sprint is politically difficult. Redoing work in the next sprint is demoralising and drags down velocity.

The AI fix: Rapid prototyping enables stakeholder feedback before developers commit time. The feedback loop tightens from weeks to hours.

4. The Documentation Debt

What agile promised: "Working software over comprehensive documentation" was about priorities, not absence. Teams would still document what matters.

What actually happens: Nobody documents anything. Release notes are copy-pasted Jira titles. Test plans are incomplete or missing. User guides are out of date the moment they're written—if they exist at all.

The AI fix: Documentation is generated from changes. It's no longer a tax on development—it's a by-product.

5. The LGTM Code Review

What agile promised: Peer review would catch bugs, spread knowledge, and maintain quality.

What actually happens: Reviews are a bottleneck. Senior developers are overwhelmed. "Looks Good To Me" becomes the default response. Style issues dominate while logic errors slip through.

The AI fix: AI reviews every pull request first. Human reviewers can focus on functionality and intent rather than syntax and formatting.

6. The Jira Ticket Problem

What agile promised: User stories would capture requirements in a way that conveyed intent and context.

What actually happens: Jira tickets become task lists. "Add button to dashboard." "Fix the thing." Requirements that take three days of back-and-forth clarification before anyone can start work.

The AI fix: Product teams use AI to articulate full-bodied requirements and generate clickable prototypes that communicate intent far better than a paragraph ever could.

7. The Invisible Technical Debt

What agile promised: Sustainable pace and continuous improvement would keep the codebase healthy.

What actually happens: Technical debt accumulates silently. Pressure to deliver means shortcuts become permanent. Security vulnerabilities hide in corners. Test coverage erodes.

The AI fix: Regular AI-driven audits surface debt, security issues, and testing weaknesses—lightweight and continuous, not expensive quarterly exercises.

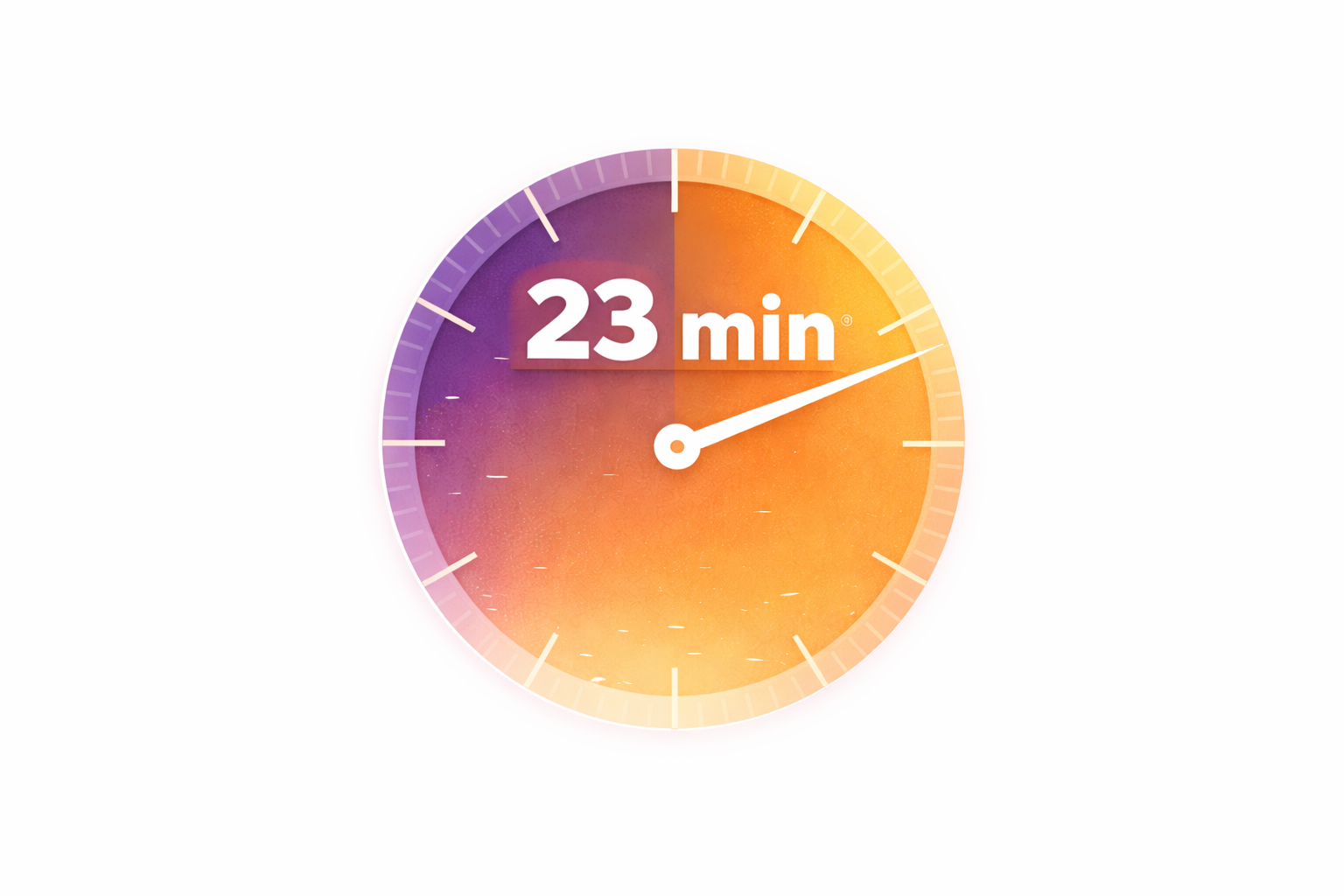

Beyond these specific failures lies a structural issue agile never solved: the constant interruption of deep work.

Developers in Scrum environments face daily stand-ups, sprint planning, backlog refinement, sprint reviews and retrospectives, plus ad-hoc questions from colleagues, clarification requests, and "quick syncs." Each interruption has a cost: research by Gloria Mark at UC Irvine is widely cited as showing that it takes around 23 minutes to get back to an interrupted task.

AI provides an alternative path for many of these interruptions:

• Instead of tapping a colleague: Query the codebase directly. Both developers stay in flow.

• Instead of pair programming overhead: AI provides many of the benefits of pairing without requiring two developers on one problem.

• Instead of waiting for reviews: AI provides immediate feedback, reducing blocking time.

The result isn't developers working in isolation. It's developers who interrupt each other only when human judgment genuinely adds value.

The broken promises above aren't abstract. Here's how we've addressed each one with specific tools and practices.

Before Development

Validating Ideas — ChatGPT, Gemini, Claude, Perplexity

The product team stress-tests new product and feature ideas with conversational AI before a single line of code is written. They explore market fit, identify potential objections, research competitors, and refine the concept. We've killed bad ideas early and refined good ones faster than traditional discovery ever allowed.

Writing Requirements — Lovable, Cursor, ChatGPT

Our product team uses AI tools to articulate full-bodied requirements. They can also hand over vibe-coded, working prototypes. The development team receives work that's actually ready to be built. "What did you mean by this?" becomes a rare question, not a daily frustration.

Estimating Work — Cursor (Ask and Planning modes)

We interrogate the codebase during planning. New developers can understand existing systems in days, not months. We decompose features into deliverables and deliverables into tasks. The breakdown that used to take a meeting now takes minutes.

During Development

Exploring Unfamiliar Code — Cursor

When a developer doesn't understand how something works, they ask the codebase directly—query existing code, understand patterns, trace logic flows—all without disturbing a single teammate. Everyone stays in flow.

Rapid Prototyping — Cursor (Agent mode)

We prototype quickly—rough working versions that we can put in front of stakeholders early. The feedback loop tightens. We build the right thing the first time, more often.

AI-Assisted Development — Cursor (Ask and Agent modes)

Developers use Ask and Agent modes as a thinking partner: rubber-ducking ideas, catching mistakes, exploring alternatives. It's pair programming without pulling someone away from their own work.

Generating Documentation — Cursor (Agent mode)

We use agents to generate documentation from our changes—release notes, user guides, and more. The output is often cleaner and more comprehensive than what most developers would produce under time pressure.

Code Reviews — CodeRabbit

CodeRabbit reviews every pull request before a human sees it. The feedback is detailed and consistent. When we do ask a human reviewer, they can focus on functionality and intent rather than style and syntax.

QA and Testing — Cursor (Agent mode)

We use Cursor to build manual test plans, which form part of our documentation. Beyond manual testing, we use agents to assess and address weaknesses in our unit testing, integration testing, and end-to-end testing. Coverage gaps get identified and filled systematically rather than discovered in production.

Working Outside Comfort Zones

All of the above adds up to something bigger: developers are more willing to work in unfamiliar territory. Need a backend developer to fix a frontend bug? Need someone to write a Python script when they've spent years in C#? AI provides guardrails. The team becomes more flexible.

After Development

Investigating Bugs — Cursor (Ask mode)

AI helps with investigation: tracing through code, suggesting hypotheses, identifying potential causes. Honestly, this is the weakest use case we've found for AI. Agents still struggle with the kind of lateral thinking that debugging requires. But even a partial assist saves time.

Auditing the Codebase — Cursor

Our CTO uses AI to audit the codebase, generating reports on debt, security issues, and testing weaknesses. These reports feed back into the backlog—or, for simpler issues, directly to agents for resolution. What used to require expensive external audits is now a regular, lightweight practice.

Framework Upgrades — Cursor (Planning and Agent modes)

AI agents make upgrades incremental. We tackle them in smaller, manageable pieces. The risk is distributed. We stay current instead of falling behind.

None of this is about replacing developers. It's about finally delivering what agile always promised but never quite achieved.

Modern Scrum, in practice, means under-specified tickets, interrupted developers, late feedback, missing documentation, and superficial reviews. These aren't failures of individuals. They're structural problems with how agile actually works in real organisations with real constraints.

AI doesn't fix bad process. But it makes good process achievable, even for teams without infinite time or perfect discipline.

At GovMetric, we've stopped accepting agile's broken promises as facts of life. We've built an SDLC where AI fills the gaps, developers stay focused, and software actually gets delivered the way we were always told it would.

The Agile Manifesto was right about the principles. We just needed better tools to achieve them.

________________________________________

Curious how your team could work this way? Get in touch.